Rainfall Estimation from Satellite Images

(Rainfall Classification)

Deep Learning with U-Net++ and LSTM | Python, TensorFlow, Keras

Overview

This project aims to predict rainfall distribution from satellite imagery using deep learning architectures.

By leveraging satellite data from Himawari-8, the project explores how image-based models can replace costly radar systems for rainfall estimation.

The proposed method combines U-Net++ for spatial feature extraction and LSTM for temporal sequence learning to improve rainfall classification accuracy.

Background

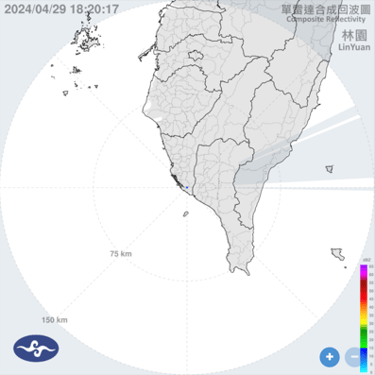

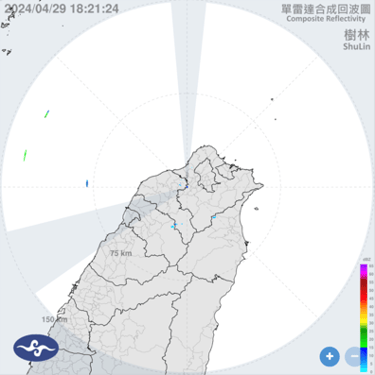

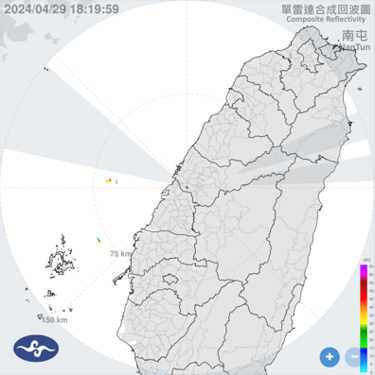

In Taiwan, meteorological radars are used to collect weather data; however, their coverage area is limited, and expanding coverage would significantly increase costs.

To address this, satellite imagery, particularly from Himawari-8 (operated by the Japan Meteorological Agency, JMA), offers a wider-coverage, lower-cost data source for rainfall estimation.

Himawari-8

Himawari-8 captures multispectral images of the Asia-Pacific region every 10 minutes, using its Advanced Himawari Imager (AHI), which consists of 16 channels (3 visible, 3 near-infrared, and 10 infrared).

Researchers from the Department of Atmospheric Sciences, National Central University (NCU) utilize infrared channels (7–16) to analyze cloud physical properties and infer rainfall conditions.
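Selecting the infrared channels is a simple slicing step once the 16 AHI bands are on a common grid. The sketch below assumes each frame is an (H, W, 16) NumPy array whose last axis is ordered band 1 to band 16. Real Himawari-8 data is distributed per band at different native resolutions, so that resampling step is assumed to have happened already. `IR_BANDS` and `select_ir_bands` are hypothetical names.

```python
import numpy as np

# AHI band numbers are 1-based: bands 1-3 are visible, 4-6 near-infrared,
# and 7-16 infrared. IR_BANDS is a hypothetical helper constant.
IR_BANDS = list(range(7, 17))


def select_ir_bands(frame: np.ndarray) -> np.ndarray:
    """Keep AHI infrared bands 7-16 from an (..., 16) array.

    Assumes the last axis is ordered band 1..16 and all bands were
    resampled to one grid beforehand.
    """
    if frame.shape[-1] != 16:
        raise ValueError(f"expected 16 AHI bands, got {frame.shape[-1]}")
    # Band b sits at index b - 1, so bands 7..16 are indices 6..15.
    return frame[..., IR_BANDS[0] - 1 : IR_BANDS[-1]]
```

For example, an (H, W, 16) frame becomes an (H, W, 10) input containing only the infrared channels.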

Dataset

Source: Himawari-8 Satellite & Central Weather Administration (CWA), Taiwan

Coverage: Japan, East Asia, and the Western Pacific

Resolution: 0.5–2 km per pixel (spatial), 10 minutes (temporal)

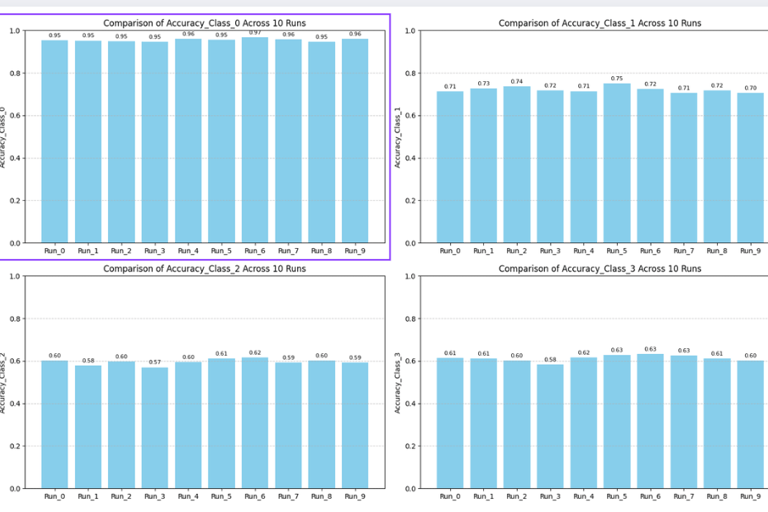

Classes:

No Rain (0–0.5 mm)

Light Rain (0.5–5 mm)

Medium Rain (5–15 mm)

Heavy Rain (≥15 mm)

Non-Taiwan regions are marked as NaN to exclude irrelevant data.
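The class boundaries above share their endpoints (0.5, 5 and 15 mm). The following sketch turns a rainfall map into class labels under the assumption of half-open bins [lower, upper), so exactly 0.5 mm counts as Light Rain, consistent with "Heavy Rain (≥15 mm)". NaN pixels (outside Taiwan) receive a separate ignore value so that they can be skipped later. The value 255 and the function name `rain_to_class` are hypothetical conventions, not taken from the project.

```python
import numpy as np

# Class indices: 0 = No Rain, 1 = Light, 2 = Medium, 3 = Heavy.
IGNORE = 255  # hypothetical label for NaN (non-Taiwan) pixels

# Upper edges of the first three classes in mm, assumed half-open [lo, hi).
EDGES = np.array([0.5, 5.0, 15.0])


def rain_to_class(rain_mm: np.ndarray) -> np.ndarray:
    """Map rainfall amounts (mm) to class indices; NaN becomes IGNORE."""
    labels = np.full(rain_mm.shape, IGNORE, dtype=np.uint8)
    valid = ~np.isnan(rain_mm)
    # np.digitize returns i such that EDGES[i-1] <= x < EDGES[i]
    labels[valid] = np.digitize(rain_mm[valid], EDGES)
    return labels
```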

Methodology

Data Preprocessing – Filtering satellite images, generating rainfall labels, and handling missing data.
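Because the LSTM stage consumes short sequences of consecutive frames, preprocessing has to build time windows as well as filter out missing data. This is a minimal sketch, assuming the frames are time-ordered at the 10-minute cadence without gaps and that each window is labeled with the rainfall classes of its last frame. The window length and the function name `make_sequences` are hypothetical choices.

```python
import numpy as np


def make_sequences(frames, labels, timesteps=3):
    """Build (sequence, label) pairs for a spatio-temporal model.

    frames: (N, H, W, C) infrared images, time-ordered and assumed gap-free.
    labels: (N, H, W) rainfall class maps aligned with frames.
    Returns X: (M, T, H, W, C) and y: (M, H, W), where y is the class map of
    the last frame in each window. Windows containing any non-finite
    satellite value (missing data) are dropped.
    """
    X, y = [], []
    for end in range(timesteps - 1, len(frames)):
        window = frames[end - timesteps + 1 : end + 1]
        if not np.isfinite(window).all():
            continue  # filter: skip windows with missing satellite pixels
        X.append(window)
        y.append(labels[end])
    if not X:  # nothing survived the filter
        return (np.empty((0, timesteps, *frames.shape[1:]), frames.dtype),
                np.empty((0, *labels.shape[1:]), labels.dtype))
    return np.stack(X), np.stack(y)
```

Dropping whole windows is the simplest way to handle missing satellite data. Interpolating short gaps would keep more samples, but it would also require extra assumptions about the data.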

Model Architecture

U-Net++ for spatial rainfall pattern extraction.

LSTM for capturing temporal rainfall dynamics.
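The text does not specify how the two networks are connected, so the following Keras code is only one plausible wiring. A small U-Net++ (nested, densely connected skip paths) is applied to every frame with shared weights. The per-frame feature maps are then fused over time. For that fusion the sketch swaps in a convolutional LSTM (`ConvLSTM2D`) instead of a plain LSTM, because it keeps the spatial layout needed for per-pixel classification. The depth, filter counts and function names are hypothetical.

```python
import numpy as np
from tensorflow.keras import Model, layers

NUM_CLASSES = 4  # No / Light / Medium / Heavy rain


def conv_block(x, filters):
    """Two 3x3 conv + ReLU layers: one U-Net++ node X^{i,j}."""
    x = layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
    return layers.Conv2D(filters, 3, padding="same", activation="relu")(x)


def build_unetpp(height, width, channels, base=16):
    """Three-level U-Net++; height and width must be divisible by 4."""
    inp = layers.Input(shape=(height, width, channels))
    # Encoder (backbone) column: X^{0,0}, X^{1,0}, X^{2,0}
    x00 = conv_block(inp, base)
    x10 = conv_block(layers.MaxPooling2D()(x00), base * 2)
    x20 = conv_block(layers.MaxPooling2D()(x10), base * 4)
    # Nested decoder nodes: X^{i,j} concatenates all earlier nodes on row i
    # with the upsampled node X^{i+1,j-1} from the row below.
    x01 = conv_block(layers.Concatenate()([x00, layers.UpSampling2D()(x10)]), base)
    x11 = conv_block(layers.Concatenate()([x10, layers.UpSampling2D()(x20)]), base * 2)
    x02 = conv_block(layers.Concatenate()([x00, x01, layers.UpSampling2D()(x11)]), base)
    return Model(inp, x02, name="unetpp_frame_encoder")


def build_unetpp_convlstm(timesteps, height, width, channels, base=16):
    """Per-frame U-Net++ features fused over time by ConvLSTM2D."""
    seq = layers.Input(shape=(timesteps, height, width, channels))
    encoder = build_unetpp(height, width, channels, base)
    # Same U-Net++ weights applied to every frame: (B, T, H, W, base)
    feats = layers.TimeDistributed(encoder)(seq)
    # return_sequences defaults to False, so only the last hidden state
    # is kept: (B, H, W, base)
    fused = layers.ConvLSTM2D(base, 3, padding="same")(feats)
    # Per-pixel probabilities over the four rainfall classes
    probs = layers.Conv2D(NUM_CLASSES, 1, activation="softmax")(fused)
    return Model(seq, probs, name="unetpp_convlstm")
```

For example, `build_unetpp_convlstm(3, 64, 64, 10)` maps three 10-channel infrared frames to a 64×64×4 probability map. If the model is trained with sparse categorical cross-entropy, ignored (NaN) pixels must first be replaced by a valid class index and then given zero per-pixel weight. An out-of-range label such as 255 would otherwise break the loss.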

Model Training & Validation – Split into training and validation sets; optimized using IoU and mIoU metrics.
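The text does not say how the split was made. Consecutive 10-minute frames are strongly correlated, so a random split tends to overstate validation scores. One common alternative is a chronological split, shown below as a hedged sketch. The optional `gap` drops samples between the two sets so that overlapping time windows do not share frames across the boundary. The function name and its parameters are hypothetical.

```python
import numpy as np


def chronological_split(X, y, val_fraction=0.2, gap=0):
    """Split time-ordered samples: earliest for training, latest for validation.

    gap: number of samples discarded just before the validation block
    (e.g. timesteps - 1 when windows overlap).
    """
    n = len(X)
    n_val = int(round(n * val_fraction))
    val_start = n - n_val
    train_end = max(val_start - gap, 0)
    return (X[:train_end], y[:train_end]), (X[val_start:], y[val_start:])
```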

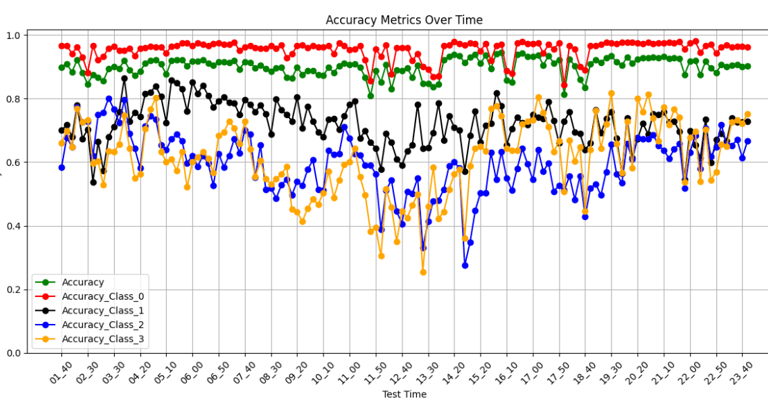

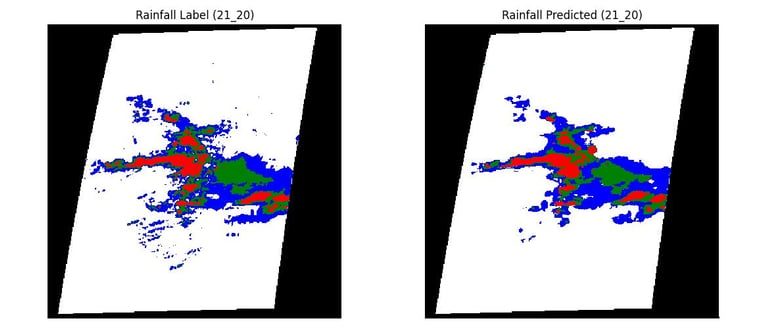

Evaluation – Assessed using Accuracy, IoU, mA (mean accuracy), and mIoU (mean IoU) to measure classification performance.
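All four metrics can be computed from one confusion matrix over the valid (non-NaN) pixels. In the sketch below, IoU for class c is TP / (TP + FP + FN), mIoU is the mean of the per-class IoU values, and Accuracy is the fraction of correctly classified pixels. It assumes that mA means mean per-class accuracy, i.e. TP / (TP + FN) averaged over classes, which is a common reading of the abbreviation. It also assumes the hypothetical ignore label 255 for masked pixels.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes=4, ignore_label=255):
    """Rows = true class, columns = predicted class; ignored pixels skipped."""
    mask = y_true != ignore_label
    t = y_true[mask].astype(np.int64)
    p = y_pred[mask].astype(np.int64)
    counts = np.bincount(t * num_classes + p, minlength=num_classes**2)
    return counts.reshape(num_classes, num_classes)


def segmentation_metrics(cm):
    """Accuracy, per-class IoU, mIoU and mA (mean per-class accuracy)."""
    tp = np.diag(cm).astype(float)
    gt = cm.sum(axis=1)    # pixels per true class (TP + FN)
    pred = cm.sum(axis=0)  # pixels per predicted class (TP + FP)
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / (gt + pred - tp)  # NaN for classes absent everywhere
        class_acc = tp / gt          # NaN for classes absent in ground truth
    return {
        "accuracy": tp.sum() / cm.sum(),
        "iou": iou,
        "mIoU": np.nanmean(iou),
        "mA": np.nanmean(class_acc),
    }
```

Classes that never occur produce 0/0 and are excluded from the means by `np.nanmean`. This matters here because Heavy Rain pixels can be rare in a given validation period.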

Key Results

The U-Net++ + LSTM hybrid model achieved higher spatial consistency and improved rainfall classification accuracy compared to baseline CNNs.

Demonstrated that satellite-based models can complement or replace radar systems, reducing operational costs for weather forecasting.

Visual rainfall predictions closely align with observed radar data across multiple regions in Taiwan.